Posts: 15 | Threads: 5 | Joined: Apr 2021 | Reputation: 0

Obi Softbody's surface blueprint generation seems to do a good job on high-poly models with consistent vertex density, such as the example models of the dragon or the bunny. Unfortunately, it has big problems with any reasonably optimised models.

The main problem is that when generating a surface blueprint for a mesh, Obi is fundamentally limited to generating a maximum of one particle per mesh vertex. If the model has vertices that are not regularly spaced, then you run into problems when setting the Cluster Radius. The areas of the model with more densely packed vertices become overconnected for any given cluster radius value, and less dense areas become underconnected or even disconnected.
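To make the over/under-connection problem concrete, here's a minimal Python sketch (not Obi code; Obi itself is C#) of plain radius-based connection on a hypothetical strip of vertices: ten densely spaced ones followed by three sparse ones. `radius_neighbours` and the sample data are made up for illustration.

```python
import math

def radius_neighbours(points, radius):
    """For each point, list the indices of every other point within `radius` (brute force)."""
    return [[j for j, q in enumerate(points) if j != i and math.dist(p, q) <= radius]
            for i, p in enumerate(points)]

# Hypothetical "optimised" strip: dense vertices on the left, sparse ones on the right.
dense = [(x * 0.1, 0.0, 0.0) for x in range(10)]        # spacing 0.1, x = 0.0 .. 0.9
sparse = [(2.0 + x * 1.0, 0.0, 0.0) for x in range(3)]  # spacing 1.0, x = 2.0 .. 4.0
points = dense + sparse

for radius in (0.15, 1.05):
    counts = [len(n) for n in radius_neighbours(points, radius)]
    print(f"radius={radius}: dense {counts[:10]}, sparse {counts[10:]}")
```

With a radius of 0.15 the sparse vertices get no neighbours at all; raising it to 1.05 links the sparse vertices, but every dense vertex is now connected to all nine of its dense neighbours, and the two regions still aren't joined.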

I wanted to see what can be done to combat this, so I just have a few questions if anyone knows:

1) Is it possible to generate intermediate particles on edges or faces rather than only on vertices?

2) Is there a better method for connecting particles than cluster radius?

3) Is it possible to limit the particles to the closest 3-4 connections to prevent over-connection?
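For question 3, a k-nearest-neighbour rule is one possible alternative. The sketch below is hypothetical (Python, not an Obi option or API): each particle connects to its `k` closest particles regardless of absolute spacing, tried on an uneven strip of ten dense and three sparse vertices.

```python
import math

def knn_neighbours(points, k):
    """Hypothetical rule: connect each point to its k nearest other points (ties broken by index)."""
    result = []
    for i, p in enumerate(points):
        ranked = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        result.append([j for _, j in ranked[:k]])
    return result

def symmetrize(neighbours):
    """Merge directed k-NN picks into undirected edges: (i, j) exists if either side chose the other."""
    return {(min(i, j), max(i, j)) for i, ns in enumerate(neighbours) for j in ns}

# Uneven strip: 10 dense vertices (indices 0-9, spacing 0.1), then 3 sparse ones (10-12, spacing 1.0).
points = [(x * 0.1, 0.0, 0.0) for x in range(10)] + [(2.0 + x, 0.0, 0.0) for x in range(3)]

nbrs = knn_neighbours(points, 3)
edges = symmetrize(nbrs)
print("dense-sparse bridge present:", (9, 10) in edges)
```

Unlike a single radius, this bridges the gap between the dense and sparse regions. Two caveats: after merging the directed picks into undirected edges a particle can end up with more than `k` connections, and nearest neighbours can still link parts of a mesh that are spatially close but unrelated.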

Posts: 5 | Threads: 1 | Joined: Jan 2021 | Reputation: 0

The way that I have solved this is by generating the blueprint with a different mesh with a vertex where each particle should be. The mesh used to create the blueprint and the mesh that actually deforms in the scene do not need to be the same!

Posts: 15 | Threads: 5 | Joined: Apr 2021 | Reputation: 0

(22-05-2021, 07:43 PM)NathanBrower Wrote: The way that I have solved this is by generating the blueprint with a different mesh with a vertex where each particle should be. The mesh used to create the blueprint and the mesh that actually deforms in the scene do not need to be the same!

Interesting, that gives you full control over the particle distribution. An automated solution would be a better workflow for us, and the fact that the mesh used to create the blueprint can be different from the mesh deformed suggests generating new intermediary particles would be fine. It seems to match up the particles to only nearby vertices so the intermediate particles may not be actually used for positioning, but they would impact the simulation.

I might write an alternative surface blueprint generator that does this. Maybe also experiment with new methods for connecting particles with neighbours, as distance isn't always producing good results.

Posts: 6,624 | Threads: 27 | Joined: Jun 2017 | Reputation: 432 | Obi Owner

Hi,

Chiming in to give some details about how this works and where it is going.

Quote: The mesh used to create the blueprint and the mesh that actually deforms in the scene do not need to be the same!

[...]

Interesting, that gives you full control over the particle distribution.

Indeed! That's the whole point behind decoupling simulation and rendering, and why ObiSoftbodySkinner and ObiSoftbody are separate components. You can use mesh A to generate the softbody blueprint, then skin mesh B to it. You can even skin multiple meshes to the same softbody by using multiple ObiSoftbodySkinners.

Quote: It seems to match up the particles to only nearby vertices so the intermediate particles may not be actually used for positioning, but they would impact the simulation.

When generating a blueprint, at most one particle per vertex in the input mesh is created, as long as it does not overlap too much with another already existing particle.
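A minimal sketch of that rule, assuming a single greedy pass over the vertices. The overlap threshold (`max_overlap`, expressed as a fraction of the particle diameter) is a hypothetical parameter; the exact criterion Obi uses isn't spelled out in this thread.

```python
import math

def sample_vertices(vertices, particle_radius, max_overlap=0.5):
    """Greedy one-particle-per-vertex sampling.

    A vertex becomes a particle unless it lies closer than
    2 * particle_radius * (1 - max_overlap) to a particle that was already accepted.
    `max_overlap` is a hypothetical knob, not an Obi parameter.
    """
    min_dist = 2.0 * particle_radius * (1.0 - max_overlap)
    particles = []
    for v in vertices:
        if all(math.dist(v, p) >= min_dist for p in particles):
            particles.append(v)
    return particles

vertices = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (0.15, 0.0, 0.0), (1.0, 0.0, 0.0)]
print(sample_vertices(vertices, particle_radius=0.1))
```

Whatever the threshold, the result never has more particles than the input mesh has vertices.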

At runtime, there's no need for particles and vertices to have a 1 to 1 relationship. Mesh rendering is performed using linear blend skinning, so each particle acts as a bone would in a traditional skeletally animated character. Particles can be placed literally anywhere.
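To illustrate the "each particle acts as a bone" idea, here's linear blend skinning for a single vertex in Python. Representing each particle as a rigid transform (rest centre, rotation, current centre) is an assumption made for the sketch; how Obi stores particle orientations and bind weights isn't described here.

```python
def skin_vertex(rest_vertex, influences):
    """Linear blend skinning of one vertex, treating each particle like a bone.

    influences: list of (weight, rest_centre, rotation, current_centre), where rotation is a
    3x3 row-major matrix (tuple of rows). Weights are assumed to sum to 1.
    Result: v' = sum_i w_i * (R_i (v - c_i) + x_i)
    """
    out = [0.0, 0.0, 0.0]
    for weight, rest_centre, rotation, centre in influences:
        local = [rest_vertex[k] - rest_centre[k] for k in range(3)]
        for r in range(3):
            moved = sum(rotation[r][k] * local[k] for k in range(3)) + centre[r]
            out[r] += weight * moved
    return out

IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))

# A vertex halfway between two particles; particle B moves up by 1, particle A stays put.
influences = [
    (0.5, (0.0, 0.0, 0.0), IDENTITY, (0.0, 0.0, 0.0)),  # particle A
    (0.5, (2.0, 0.0, 0.0), IDENTITY, (2.0, 1.0, 0.0)),  # particle B
]
print(skin_vertex((1.0, 0.0, 0.0), influences))  # [1.0, 0.5, 0.0]
```

Because the vertex only ever sees a weighted blend of particle transforms, particles can sit anywhere; nothing requires them to coincide with vertices.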

Quote: I might write an alternative surface blueprint generator that does this. Maybe also experiment with new methods for connecting particles with neighbours, as distance isn't always producing good results.

You can write your own blueprint generators if you wish. I'm currently writing a blueprint generator that uses an intermediate voxelized representation of the input mesh, similar to what volume softbodies do, and uses it for connecting particles.

- Generates a voxelization of the mesh.

- Generates one particle per voxel, particles on the surface of the mesh, or both (like volume and surface softbodies do).

- For clusters (connections) it uses geodesic distances calculated using voxels. This results in much higher quality connections in concave meshes, especially in parts of the mesh that are spatially close but don't belong to the same region (character legs, fingers, etc).
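A rough illustration of why geodesic distances help: breadth-first search over filled voxels counts the steps needed to walk *through* the model, so two "legs" that nearly touch are still far apart. This is a 2D Python sketch under simple assumptions (4-connected grid, unit step cost), not the actual generator.

```python
import math
from collections import deque

def voxel_geodesic(filled, start):
    """Breadth-first search through filled voxels (4-connected here, 6-connected in 3D).
    Returns {voxel: number of voxel steps from start}, a coarse geodesic distance."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        for n in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if n in filled and n not in dist:
                dist[n] = dist[(x, y)] + 1
                queue.append(n)
    return dist

# Two "legs" (columns x=0 and x=2, y=0..4) joined only at the top by voxel (1, 4).
legs = {(0, y) for y in range(5)} | {(2, y) for y in range(5)} | {(1, 4)}
d = voxel_geodesic(legs, (0, 0))
print("straight-line:", math.dist((0, 0), (2, 0)), "geodesic:", d[(2, 0)])
```

A cluster radius of, say, 3 voxels measured this way keeps the two feet apart, even though they're only 2 voxels apart in a straight line.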

Posts: 15 | Threads: 5 | Joined: Apr 2021 | Reputation: 0

31-05-2021, 03:20 AM

(This post was last modified: 31-05-2021, 03:21 AM by Nyphur.)

(29-05-2021, 08:24 AM)josemendez Wrote: You can write your own blueprint generators if you wish. I'm currently writing a blueprint generator that uses an intermediate voxelized representation of the input mesh, similar to what volume softbodies do, and uses it for connecting particles.

- Generates a voxelization of the mesh.

- Generates one particle per voxel, particles on the surface of the mesh, or both (like volume and surface softbodies do).

- For clusters (connections) it uses geodesic distances calculated using voxels. This results in much higher quality connections in concave meshes, especially in parts of the mesh that are spatially close but don't belong to the same region (character legs, fingers, etc).

That'd be really cool, like a best-of-both approach where it can use both surface and voxel representations? Does it prefer surface vertices and use voxels to fill in the gaps?

My thought for surface blueprints was just to create a second generation of vertices in the middle of any face or edge larger than the particle size. We could keep subdividing the surface until the distance between vertices is less than the particle size; then we'd have a continuous density of particles that still only uses the surface geometry, with no need for internal particles or voxelisation.
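The subdivision idea can be sketched as recursive 1-to-4 midpoint splitting per triangle, stopping once every edge is no longer than the target spacing. Python, with hypothetical names; nothing here is an Obi API.

```python
import math

def _midpoint(p, q):
    return tuple((p[k] + q[k]) / 2 for k in range(3))

def subdivide(tri, max_len, out):
    """Split a triangle 1-to-4 at its edge midpoints, recursively, until no edge exceeds
    max_len; add the resulting vertex positions to the set `out` (rounded so shared
    vertices merge)."""
    a, b, c = tri
    if max(math.dist(a, b), math.dist(b, c), math.dist(c, a)) <= max_len:
        out.update(tuple(round(v, 9) for v in p) for p in tri)
        return
    ab, bc, ca = _midpoint(a, b), _midpoint(b, c), _midpoint(c, a)
    for child in ((a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)):
        subdivide(child, max_len, out)

def densify(triangles, max_len):
    """Candidate particle positions: mesh vertices plus inserted edge/face points."""
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    points = set()
    for tri in triangles:
        subdivide(tri, max_len, points)
    return sorted(points)

big_triangle = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
print(len(densify(big_triangle, 2.0)), len(densify(big_triangle, 0.5)))
```

Neighbouring triangles may stop at different depths (leaving T-junctions), which is harmless here since only the point positions are kept.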

Posts: 6,624 | Threads: 27 | Joined: Jun 2017 | Reputation: 432 | Obi Owner

31-05-2021, 08:04 AM

(This post was last modified: 31-05-2021, 08:05 AM by josemendez.)

(31-05-2021, 03:20 AM)Nyphur Wrote: That'd be really cool, like a best-of-both approach where it can use both surface and voxel representations? Does it prefer surface vertices and use voxels to fill in the gaps?

Works more or less like this:

- Any voxels overlapped by a mesh triangle are marked as "surface" voxels.

- The voxels outside the model are flood-filled and marked as "exterior" voxels; think of this as a cast of the model.

- All remaining "empty" voxels are interior to the mesh, so we mark them as "interior".

(The rationale behind flood filling the exterior of the model instead of the interior is that this handles disconnected parts of the model automatically)
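The three labelling steps above can be sketched on a 2D grid (the 3D version just uses 6 neighbours). It assumes the triangle/voxel overlap test has already produced the `surface` set and that the grid is padded so its border cells lie outside the model; all names are hypothetical.

```python
from collections import deque

def classify_voxels(width, height, surface):
    """Label each cell of a width x height grid 'surface', 'exterior' or 'interior'.
    `surface` is the set of cells overlapped by mesh triangles (computed beforehand)."""
    labels = {(x, y): ('surface' if (x, y) in surface else None)
              for x in range(width) for y in range(height)}
    queue = deque()
    for (x, y), label in labels.items():  # seed the flood fill from the grid border
        if label is None and (x in (0, width - 1) or y in (0, height - 1)):
            labels[(x, y)] = 'exterior'
            queue.append((x, y))
    while queue:  # flood-fill the outside "cast" of the model
        x, y = queue.popleft()
        for n in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if labels.get(n, 'surface') is None:  # off-grid cells count as blocked
                labels[n] = 'exterior'
                queue.append(n)
    # Anything the outside flood could not reach is enclosed by the surface: interior.
    return {cell: (label or 'interior') for cell, label in labels.items()}

def square_outline(x0, y0, size):
    return {(x, y) for x in range(x0, x0 + size) for y in range(y0, y0 + size)
            if x in (x0, x0 + size - 1) or y in (y0, y0 + size - 1)}

surface = square_outline(1, 1, 5) | square_outline(7, 1, 5)  # two disconnected parts
labels = classify_voxels(13, 7, surface)
counts = {kind: list(labels.values()).count(kind) for kind in ('surface', 'exterior', 'interior')}
print(counts)
```

The example uses two separate outlines: one flood fill from the border finds the interior of both without needing a seed per part.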

Volume particles are generated for the interior voxels only; "surface" voxels are ignored. Then the surface of the mesh is sampled as usual (one particle per vertex, unless they overlap).

You can select 3 sampling methods: surface only, volume only (in this case, surface voxels do generate particles), or both.

(31-05-2021, 03:20 AM)Nyphur Wrote: My thought for surface blueprints was just to create a second generation of vertices in the middle of any face or edge larger than the particle size. We could just keep subdividing the surface until the distance between vertices is less than the particle length, then we have a continuous density of particles but it's still only using the surface geometry and there's no need for internal particles or voxelisation.

IMHO, there's little point in using more particles than vertices for surface sampling. The extra particles wouldn't help much with deformation quality (skinning quality depends only on how many vertices your mesh has and how many bone influences each vertex has) or collision detection, and they'd hurt performance (more particles = worse performance).

Maybe I'm not aware of a particular use case that would benefit from this?

Posts: 15 | Threads: 5 | Joined: Apr 2021 | Reputation: 0

01-06-2021, 04:16 PM

(This post was last modified: 01-06-2021, 04:24 PM by Nyphur.)

(31-05-2021, 08:04 AM)josemendez Wrote: Imho, there's not much interest in using more particles than vertices for surface sampling. The reason is that the extra particles wouldn't aid much regarding deformation quality (skinning quality depends only on how many vertices your mesh has, and how many bone influences per vertex), collision detection, or performance (more particles = worse performance).

Maybe I'm not aware of a particular use case that would benefit from this?

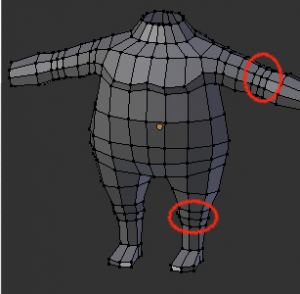

It's a solution to a problem with Obi Softbody's surface blueprint generator: surface blueprints only work well with organic models that have consistent vertex density across the entire model. I've attached a screenshot showing the geometry of the Barrel, Dragon, and Prism models from the sample folder. Look how regularly spaced all the vertices are on these models; they're designed to work well with Obi. It's very easy to generate surface blueprints with regular particle spacing from these, so connecting particles based on distance works very well.

The problem is that real game assets aren't usually built like this, they're optimised and vertex density varies from one part of the model to another. As a result, the surface blueprints generated can have big gaps and the particles won't be able to connect properly using distance. That's why I was thinking that either intermediate particles need to be created or there needs to be a much better way of connecting particles to neighbours than using distance.

Posts: 6,624 | Threads: 27 | Joined: Jun 2017 | Reputation: 432 | Obi Owner

02-06-2021, 08:20 AM

(This post was last modified: 08-06-2021, 11:16 AM by josemendez.)

(01-06-2021, 04:16 PM)Nyphur Wrote: Look how regularly spaced all the vertices are on these models, they're designed to work well with Obi.

They're designed for good deformation, be it using Obi or otherwise (skeletal skinning, procedural shape modifiers, blend shapes, or any other kind of deformation).

(01-06-2021, 04:16 PM)Nyphur Wrote: The problem is that real game assets aren't usually built like this, they're optimised and vertex density varies from one part of the model to another.

Real game assets that are intended to deform *are* built like this, simply because it's impossible to deform them otherwise. Models are made of triangles and it's mathematically impossible to bend a single triangle, so you need many. Once you want part of a model to deform in any meaningful way you need to add more triangles and hence, more vertices.

This is why game characters have more edge loops and vertices around joints (shoulders, elbows, knees, etc.): so that they can be deformed properly by the skeleton.

If you had to deform any part of the character and not just the joints, you'd place edge loops everywhere and end up with homogeneous vertex density. Of course, most pre-made game assets you can find online aren't designed with deformation in mind: vertex distribution is "optimized" for visuals only.

(01-06-2021, 04:16 PM)Nyphur Wrote: As a result, the surface blueprints generated can have big gaps and the particles won't be able to connect properly using distance. That's why I was thinking that either intermediate particles need to be created or there needs to be a much better way of connecting particles to neighbours than using distance.

What's the point of adding and connecting more particles if there are no vertices to be deformed by them? Take, for instance, Unity's built-in capsule shape:

[Screenshot: Unity's built-in capsule (Captura de pantalla 2021-06-02 a las 9.16.52.png)]

If you wanted to bend it 90º (I used Blender's deform modifier here) this is what you'd get:

[Screenshot: the capsule bent 90º with Blender's deform modifier (Captura de pantalla 2021-06-02 a las 9.17.12.png)]

Doesn't look good, does it? No matter how many particles you add in the mid-section of the capsule, the result will look terrible, since the mesh doesn't have enough vertices in that region. This mesh isn't meant to be deformed as-is. If you want decent deformation by any means, you need to add vertices there:

[Screenshot: the capsule with extra vertices added to its mid-section (Captura de pantalla 2021-06-02 a las 9.17.48.png)]

For a model to be deformable, it has to have proper topology and enough vertex density. This is not exclusive to Obi; it's just how geometry works. Having more deformation targets (particles in Obi, but also lattice points, skeleton bones, clusters, spline control points, etc. in other deformation systems) than vertices in a mesh doesn't make sense in any case that I can think of. You usually want the opposite: fewer bones than vertices, fewer lattice points than vertices, fewer particles than vertices, etc.

It's like trying to get a good flag simulation from a single quad: you simply can't, unless you add more vertices.
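One way to quantify this: however many particles drive it, the mesh between two vertices stays straight, so when a section is bent into an arc the worst gap between the mesh and the intended curve is the sagitta of each edge. A small Python check, assuming a 2D simplification of the capsule's mid-section bent 90º around a radius of 1 (units arbitrary):

```python
import math

def max_chord_error(bend_radius, bend_angle, segments):
    """Sagitta: how far a straight edge between two vertices on an arc sits from the arc.

    Bending a section into an arc of `bend_angle` radians with `segments` edges between
    vertex rings leaves each edge straight, so the worst gap is r * (1 - cos(angle / (2n))).
    """
    return bend_radius * (1.0 - math.cos(bend_angle / (2 * segments)))

for n in (1, 2, 4, 8):
    print(f"{n} segment(s): max gap {max_chord_error(1.0, math.pi / 2, n):.4f}")
```

The gap drops from roughly 0.29 with no intermediate edge loops to under 0.005 with eight, and only added vertices change `segments`; extra particles don't.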

Posts: 15 | Threads: 5 | Joined: Apr 2021 | Reputation: 0

Thanks so much jose, I appreciate all of the information and the patience you've shown explaining these concepts to me. It definitely helps me understand how the tool is designed to work. I don't think I'm explaining what my problem is properly though, and what I'm trying to do with the tool.

(02-06-2021, 08:20 AM)josemendez Wrote: What's the point of adding and connecting more particles if there are no vertices to be deformed by them? Take, for instance, Unity's built-in capsule shape:

If you wanted to bend it 90º (I used Blender's deform modifier here) this is what you'd get:

Doesn't look good, does it? No matter how many particles you add in the mid section of the capsule, the result will look terrible since the mesh doesn't have enough vertices in that region.

OK, this is where I think the misunderstanding is. This example with the capsule actually is what I'm trying to achieve. The capsule example is pretty extreme since it has that huge relative gap between vertices and that 90 degree deformation. Most models won't be as bad as this, and not every use case necessarily needs a smooth deformable softbody.

Say we use a regular Unity capsule mesh like your example. If we set the cluster radius correctly so that the top area connects together well and the bottom area connects together well, the top and bottom will not be connected to each other. You effectively get two separate softbodies flopping on the floor with triangles trailing between them. But if you set the Cluster Radius high enough to connect the top to the bottom, the entire top area and bottom area will be way over-connected.
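The capsule problem can be reproduced with a connected-components check. The point set below is a crude hypothetical stand-in for a low-poly capsule (one vertex ring plus a pole per cap, nothing in between), not Unity's actual capsule layout; union-find counts how many separate pieces a given cluster radius produces.

```python
import math

def capsule_points(half_height=1.0, ring_radius=0.5, ring_size=8):
    """Crude low-poly stand-in for a capsule: one vertex ring plus a pole per cap,
    and no vertices at all in the mid-section."""
    points = []
    for y in (half_height, -half_height):
        for k in range(ring_size):
            a = 2 * math.pi * k / ring_size
            points.append((ring_radius * math.cos(a), y, ring_radius * math.sin(a)))
        points.append((0.0, y + math.copysign(ring_radius, y), 0.0))  # cap pole
    return points

def count_components(points, radius):
    """Union-find over the 'connect every pair within radius' graph."""
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= radius:
                parent[find(i)] = find(j)
    return len({find(i) for i in range(len(points))})

pts = capsule_points()
for r in (0.75, 2.05):
    print(f"cluster radius {r}: {count_components(pts, r)} component(s)")
```

At 0.75 each cap hangs together but the capsule splits in two; at 2.05 it's a single piece, but every cap vertex is now linked to every other vertex of its cap.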

What I want is to be able to connect the top and bottom parts somehow without overconnecting everything else. That's why I suggested maybe a new way of connecting particles (other than cluster radius) or maybe generating intermediary particles to get around that problem. I know that the end result wouldn't look good if we're deforming it as you showed in your helpful example. But wouldn't it still do a decent job of impact deformation like your barrel example? And wouldn't the particles still collide with other softbodies even if they aren't influencing vertices in the mesh?

(02-06-2021, 08:20 AM)josemendez Wrote: What's the point of adding and connecting more particles if there are no vertices to be deformed by them?

To come back to this point, maybe I'm misunderstanding the tool, but doesn't Obi Softbody simulate collisions and stuff too? The idea with adding those extra vertices isn't to improve the deformation (because obviously it won't; there are no more vertices to deform). It's to fill in the gaps so we have regularly spaced particles, both so we can connect the particles together easily and so that other softbodies on the solver can collide/interact with it well. Is that the wrong way to do this?

I can always just go back to my artists and get them to subdivide the models we're planning to use with Obi Softbody so that the blueprints will generate and connect well, but if there's an alternative that would be a good step to skip.

Posts: 6,624 | Threads: 27 | Joined: Jun 2017 | Reputation: 432 | Obi Owner

11-06-2021, 08:00 AM

(This post was last modified: 11-06-2021, 09:50 AM by josemendez.)

(11-06-2021, 12:31 AM)Nyphur Wrote: Thanks so much jose, I appreciate all of the information and the patience you've shown explaining these concepts to me.

No worries, that's what I'm here for.

(11-06-2021, 12:31 AM)Nyphur Wrote: What I want is to be able to connect the top and bottom parts somehow without overconnecting everything else. That's why I suggested maybe a new way of connecting particles (other than cluster radius)

In that case, you'd have soft caps (bottom and top of the capsule) and a completely rigid mid-section that doesn't react to collisions (since there are no particles there to collide). Colliders and other softbodies would pass right through the mid part of the capsule.

(11-06-2021, 12:31 AM)Nyphur Wrote: maybe generating intermediary particles to get around that problem. I know that the end result wouldn't look good if we're deforming it as you showed in your helpful example. But wouldn't it still do a decent job of impact deformation like your barrel example?

Generating intermediary particles is OK, but you can already do that by using a subdivided mesh to generate the blueprint and skinning the original, unsubdivided mesh to it. However, if you already have a subdivided mesh for particle generation, what's the point in using the original, unsubdivided mesh for skinning (which will look worse)? You usually do it the other way around: use a low-quality mesh to generate particles, then skin a high-quality mesh to it.
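For context, binding the original mesh to particles generated from a subdivided copy needs some vertex-to-particle weighting. The rule below (normalised inverse distance to the `k` nearest particles) is a hypothetical stand-in written in Python; it is not necessarily how ObiSoftbodySkinner computes its weights, which this thread doesn't describe.

```python
import math

def skin_weights(vertex, particles, k=4, eps=1e-9):
    """Hypothetical binding: weight the k nearest particles by normalised inverse distance.
    Returns [(particle_index, weight), ...] with weights summing to 1."""
    nearest = sorted((math.dist(vertex, p), i) for i, p in enumerate(particles))[:k]
    raw = [(i, 1.0 / (d + eps)) for d, i in nearest]  # eps avoids dividing by zero
    total = sum(w for _, w in raw)
    return [(i, w / total) for i, w in raw]

# Particles sampled from a (hypothetical) subdivided copy of the mesh, plus one far-away outlier.
particles = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (5.0, 5.0, 5.0)]
# A vertex of the original low-poly mesh, sitting between four particles.
print(skin_weights((0.5, 0.5, 0.0), particles))
```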

(11-06-2021, 12:31 AM)Nyphur Wrote: To come back to this point, maybe I'm misunderstanding the tool but doesn't Obi Softbody simulate collision and stuff too? The idea with adding those extra vertices isn't to improve the deformation (because obviously it won't, there are no more vertices to deform). It's to fill in the gaps so we have regularly spaced particles, both so we can connect the particles together easily so that other softbodies on the solver can collide/interact with it well. Is that the wrong way to do this? [...]wouldn't it still do a decent job of impact deformation like your barrel example?

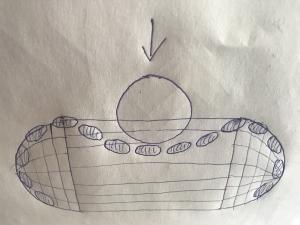

Yes, having denser particle sampling in regions with no/few vertices would allow for collision detection. However, collisions *always* cause deformation since they push particles around (impact deformation, as you describe it), so objects would clip through and look wrong: colliders and other softbodies would sink into the mesh when colliding, as the particles deform but the mesh doesn't. I sketched it for you (the sphere pushing from above would be a collider, and I've only drawn the relevant particles):

The only case where deformation would not happen as a result of collision would be a completely rigid softbody. But if you have a completely rigid softbody, you might as well use a rigidbody, which is a lot cheaper to simulate and will look much better.

Softbody = deformable body = you need vertices for good deformation. What makes sense in pretty much all cases (and what all physics engines do) is to have a high-quality mesh for visuals and a low-quality, simplified physics representation of it, not the other way around. That's why blueprints start with one particle per vertex (max quality) and can only go down from there (progressively lower quality).

If you *really* wanted this for some reason (w/ collision clipping and derpy deformation), it would be possible to do it by:

1.- voxelizing only the surface of the mesh, then projecting the resulting particles onto the actual mesh surface. This gives good particle coverage regardless of mesh topology.

2.- generating clusters by distance as usual.

3.- profit!
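Step 1 could look roughly like this in Python. Everything here is a hypothetical sketch rather than the generator being offered: voxels are scanned across the mesh's bounding box, a voxel counts as "surface" if its centre lies within half a voxel diagonal of the mesh (a conservative stand-in for an exact triangle/box overlap test), and each kept voxel yields one particle at the closest point on the mesh. The closest-point routine follows the Voronoi-region method from Ericson's *Real-Time Collision Detection*. Step 2 would then connect these particles by distance as usual.

```python
import math

def _sub(p, q):
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])

def _add(p, q):
    return (p[0] + q[0], p[1] + q[1], p[2] + q[2])

def _scale(p, s):
    return (p[0] * s, p[1] * s, p[2] * s)

def _dot(p, q):
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]

def closest_point_on_triangle(p, a, b, c):
    """Closest point to p on triangle abc, found by testing which Voronoi region p is in."""
    ab, ac, ap = _sub(b, a), _sub(c, a), _sub(p, a)
    d1, d2 = _dot(ab, ap), _dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return a                                     # vertex region A
    bp = _sub(p, b)
    d3, d4 = _dot(ab, bp), _dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return b                                     # vertex region B
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        return _add(a, _scale(ab, d1 / (d1 - d3)))   # edge AB
    cp = _sub(p, c)
    d5, d6 = _dot(ab, cp), _dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return c                                     # vertex region C
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        return _add(a, _scale(ac, d2 / (d2 - d6)))   # edge AC
    va = d3 * d6 - d5 * d4
    if va <= 0 and d4 - d3 >= 0 and d5 - d6 >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return _add(b, _scale(_sub(c, b), w))        # edge BC
    denom = 1.0 / (va + vb + vc)
    return _add(a, _add(_scale(ab, vb * denom), _scale(ac, vc * denom)))  # face interior

def surface_particles(triangles, cell):
    """Scan bounding-box voxels, keep those near the surface, project each onto the mesh."""
    verts = [v for tri in triangles for v in tri]
    lo = [min(v[k] for v in verts) for k in range(3)]
    counts = [max(1, math.ceil((max(v[k] for v in verts) - lo[k]) / cell)) for k in range(3)]
    reach = cell * math.sqrt(3) / 2  # half a voxel diagonal
    particles = []
    for i in range(counts[0]):
        for j in range(counts[1]):
            for k in range(counts[2]):
                centre = (lo[0] + (i + 0.5) * cell, lo[1] + (j + 0.5) * cell,
                          lo[2] + (k + 0.5) * cell)
                closest = min((closest_point_on_triangle(centre, *tri) for tri in triangles),
                              key=lambda q: math.dist(centre, q))
                if math.dist(centre, closest) <= reach:
                    particles.append(closest)
    return particles

triangle = [((0.0, 0.0, 0.0), (4.2, 0.0, 0.0), (0.0, 4.2, 0.0))]
ps = surface_particles(triangle, cell=1.0)
print(len(ps), "particles from a triangle with 3 vertices")
```

A single triangle with 3 vertices yields 15 evenly spaced particles here, which is the "coverage regardless of topology" point. The search is brute force (every voxel against every triangle): fine for a sketch, not for production meshes.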

This is basically a blend of volume and surface blueprints. I can get it running for you and have it ready around next Monday/Tuesday. Let me know if you're interested in it.
