sdpgames (Posts: 10, Threads: 2, Joined: Jul 2019, Reputation: 0)

Hi!

When I have a fluid simulation and enable/disable an ObiCollider, it's incredibly slow for a few frames (the game can freeze for 3 seconds in the editor; it's less bad on device, but still bad). I narrowed it down to the fact that every change in the collider structure requires some sort of mega flush to Oni. The same happens when creating ObiRigidbodies at runtime, destroying ObiColliders at runtime, etc.

It makes the game unusable at fairly random moments. Is there anything we can do?

We are using the Oni backend, Unity 2019.4.22f1 and Obi Fluid 6.0.1. Tested on both Mac (editor) and iOS (iPhone SE, SE 2020 and iPhone 11), with the same result every time.

Please help, thanks!

josemendez, Obi Owner (Posts: 6,322, Threads: 24, Joined: Jun 2017, Reputation: 400)

30-03-2021, 09:37 AM

(This post was last modified: 30-03-2021, 09:43 AM by josemendez.)

> (30-03-2021, 09:30 AM) sdpgames wrote: Hi! When I have a fluid simulation and enable/disable an ObiCollider it's incredibly slow for a few frames [...]

Hi there,

I'm unable to reproduce this. Enabling or disabling a collider should only affect that individual collider, there's no "mega flush" of any kind taking place.

What is done when creating (or activating) a collider is just appending its data to a collider list. When removing (or deactivating) a collider, its data is swapped with the last entry in the collider list, and the list size is reduced by 1. Both operations have O(1) cost, so they should be extremely cheap. The collider list (as well as the rigidbody list) is memory-mapped, so passing it to Oni just copies a pointer, not the entire list. You can check the implementation in ObiColliderWorld.cs.
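A minimal sketch of the append / swap-with-last removal described above, assuming colliders are tracked by a plain integer id plus an id-to-index dictionary. The names `AddCollider`, `RemoveCollider` and `indexOf` are hypothetical (not Obi's API), the real collider world stores shape, AABB and transform data per entry, and this is a plain .NET 5+ console sketch (C# 9 top-level statements), not Unity code:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch: colliders are identified by an int id. The real
// collider world stores shape, AABB and transform data per entry instead.
var colliders = new List<int>();          // dense list, one entry per active collider
var indexOf = new Dictionary<int, int>(); // id -> current position in 'colliders'

// Creating/activating: append at the end of the list (amortized O(1)).
void AddCollider(int id)
{
    indexOf[id] = colliders.Count;
    colliders.Add(id);
}

// Removing/deactivating: overwrite the entry with the last one, then shrink by 1 (O(1)).
void RemoveCollider(int id)
{
    int index = indexOf[id];
    int last = colliders.Count - 1;
    int movedId = colliders[last];

    colliders[index] = movedId; // the last entry fills the hole
    indexOf[movedId] = index;   // keep the moved entry's position up to date
    colliders.RemoveAt(last);   // removing the tail shifts no elements
    indexOf.Remove(id);
}

AddCollider(10);
AddCollider(20);
AddCollider(30);
RemoveCollider(10);
Console.WriteLine(string.Join(", ", colliders)); // prints "30, 20"
```

Because the hole is filled by the last entry, no other element moves, which is what keeps removal O(1); the trade-off is that list order is not preserved.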

- How many colliders are there in the scene?

- What kinds of colliders are we talking about? (primitives, or meshcolliders/distance fields?)

- Could you share a profiler screenshot showing this performance drop you're describing?

kind regards

sdpgames (Posts: 10, Threads: 2, Joined: Jul 2019, Reputation: 0)

30-03-2021, 10:20 AM

(This post was last modified: 30-03-2021, 10:42 AM by sdpgames.)

That was fast, cool!

We're using a few hundred colliders: convex mesh colliders. I know what you're thinking: it works! It works fine, fast and great 99.9% of the time, and then BOOOM.

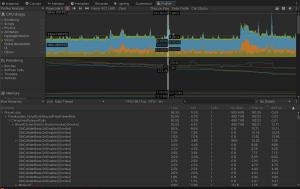

See the screenshot. It's quite hard to catch in the profiler, so I was a bit lucky there.

Edit: the mega flush I'm talking about is this:

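```csharp
// For every backend implementation: pass it the collider arrays, then update its world.
for (int i = 0; i < implementations.Count; ++i)
{
    implementations[i].SetColliders(colliderShapes, colliderAabbs, colliderTransforms, 0);
    implementations[i].UpdateWorld(0);
}
```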

It seems UpdateWorld is some sort of flush, and that it ends up being called n^2 times when destroying a batch of colliders. I could be wrong, it's just my guess.

Second update: using the profiler's % info, I plotted the time taken by each collider destruction compared to the next (faster) one.

It's not exactly 1/n^2, but it doesn't look linear in the number of colliders being destroyed either.
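To make the two hypotheses in this exchange concrete, here is a toy cost model (an assumption for illustration, not a measurement and not Obi's code): (a) every destruction triggers a pass over all colliders still alive, versus (b) every destruction costs a single removal, which is how josemendez describes UpdateWorld later in the thread. Destroying all n colliders costs n(n+1)/2 steps under (a) and n steps under (b). The function names are hypothetical; plain .NET 5+ console code:

```csharp
using System;

// Toy cost model (hypothetical, for illustration only).
// (a) each of the k destructions walks every collider still alive.
long FullPassCost(int n, int k)
{
    long total = 0;
    for (int i = 0; i < k; ++i)
        total += n - i; // colliders remaining before the i-th destruction
    return total;
}

// (b) each destruction performs a single removal, independent of n.
long PerColliderCost(int k) => k;

Console.WriteLine(FullPassCost(300, 300)); // prints 45150
Console.WriteLine(PerColliderCost(300));   // prints 300
```

Under (a), each successive destruction gets cheaper as the list shrinks; under (b), the per-destruction cost stays flat.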

josemendez, Obi Owner (Posts: 6,322, Threads: 24, Joined: Jun 2017, Reputation: 400)

30-03-2021, 01:06 PM

(This post was last modified: 30-03-2021, 01:07 PM by josemendez.)

> (30-03-2021, 10:20 AM) sdpgames wrote: [...] It seems the UpdateWorld is some sort of flush and is called n^2 when destroying a batch of colliders. I could be wrong, just my thought [...]

Hi,

I'll try to reproduce this and get back to you. The UpdateWorld method looks at the end of the list (newly added or removed colliders), then adds or removes them to/from a spatial partitioning structure. We use a multilevel hash grid, so insertions and deletions should be constant-time too. If you disable 10 colliders, then 10 deletions from a hashmap will be performed the next time UpdateWorld is called.
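A simplified sketch of why insertion and removal stay cheap in a hash grid, assuming each collider is stored in every cell its AABB overlaps. The layout (cells keyed by `(level, x, y, z)`, edge length 2^level) and the names `Insert`, `Remove` and `CellsFor` are assumptions for illustration, not Obi's implementation. It uses `System.Numerics.Vector3` so it runs as a plain .NET 5+ console program:

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical multilevel hash grid (not Obi's code): a cell is keyed by
// (level, x, y, z) and holds the ids of colliders whose AABB overlaps it.
// Cells at a given level have an edge length of 2^level world units.
var grid = new Dictionary<(int Level, int X, int Y, int Z), HashSet<int>>();

// Enumerates the cells at 'level' overlapped by the AABB [min, max].
IEnumerable<(int, int, int, int)> CellsFor(int level, Vector3 min, Vector3 max)
{
    float size = MathF.Pow(2f, level);
    for (int x = (int)MathF.Floor(min.X / size); x <= (int)MathF.Floor(max.X / size); ++x)
        for (int y = (int)MathF.Floor(min.Y / size); y <= (int)MathF.Floor(max.Y / size); ++y)
            for (int z = (int)MathF.Floor(min.Z / size); z <= (int)MathF.Floor(max.Z / size); ++z)
                yield return (level, x, y, z);
}

void Insert(int id, int level, Vector3 min, Vector3 max)
{
    foreach (var cell in CellsFor(level, min, max))
    {
        if (!grid.TryGetValue(cell, out var ids))
            grid[cell] = ids = new HashSet<int>();
        ids.Add(id);
    }
}

void Remove(int id, int level, Vector3 min, Vector3 max)
{
    foreach (var cell in CellsFor(level, min, max))
        if (grid.TryGetValue(cell, out var ids) && ids.Remove(id) && ids.Count == 0)
            grid.Remove(cell); // drop cells that became empty
}

var boxMin = new Vector3(0.1f, 0.1f, 0.1f);
var boxMax = new Vector3(0.9f, 1.2f, 0.9f);
Insert(7, 0, boxMin, boxMax);  // level 0 (1-unit cells): spans 1 x 2 x 1 cells
Console.WriteLine(grid.Count); // prints 2
Remove(7, 0, boxMin, boxMax);
Console.WriteLine(grid.Count); // prints 0
```

Each call only touches the cells one collider overlaps, so its cost does not depend on how many other colliders are in the grid; disabling 10 colliders means 10 such `Remove` calls.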

sdpgames (Posts: 10, Threads: 2, Joined: Jul 2019, Reputation: 0)

> (30-03-2021, 01:06 PM) josemendez wrote: Hi, Will try to reproduce this and get back to you. [...]

Sounds good. In the meantime I found a workaround: instead of disabling an object, I scale it down to a "close to zero" scale. It kinda works for the time being, and we see good performance throughout.

josemendez, Obi Owner (Posts: 6,322, Threads: 24, Joined: Jun 2017, Reputation: 400)

31-03-2021, 10:22 AM

(This post was last modified: 31-03-2021, 10:23 AM by josemendez.)

> (30-03-2021, 02:56 PM) sdpgames wrote: Sounds good. In the meantime I found a workaround, to scale each object I want to disable to a "close to zero" scale. It kinda works for the time being and we see good performance all throughout

I did some stress/performance tests, but I'm still unable to trigger this. The fact that scaling objects close to zero improves performance is a hint, though. When a collider is inserted into or removed from the multilevel grid, the size of its bounds determines the grid level it goes into: larger colliders are inserted into coarser grids, smaller ones into finer grids. On average, a typical collider is inserted into only 8 cells of whatever grid level it is assigned to.
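One common way to get the "about 8 cells" behavior, sketched under assumptions (not necessarily Obi's exact rule): pick the smallest level whose cell edge, 2^level, is at least the collider's largest AABB extent. A box no larger than a cell can straddle at most 2 cells per axis, so it lands in at most 2 × 2 × 2 = 8 cells. `LevelFor` and `CellsPerAxis` are hypothetical names; plain .NET 5+ console code:

```csharp
using System;

// Hypothetical rule: smallest level whose cell edge (2^level) >= the largest extent.
// Negative levels correspond to cells smaller than one world unit.
int LevelFor(float largestExtent) => (int)MathF.Ceiling(MathF.Log2(largestExtent));

// Number of cells spanned along one axis by the interval [min, max] at 'level'.
int CellsPerAxis(float min, float max, int level)
{
    float size = MathF.Pow(2f, level);
    return (int)MathF.Floor(max / size) - (int)MathF.Floor(min / size) + 1;
}

// A 0.3-unit collider lands on level -1 (0.5-unit cells) and spans 2 cells along x here.
int chosenLevel = LevelFor(0.3f);
Console.WriteLine($"level {chosenLevel}, cells along x: {CellsPerAxis(0.4f, 0.7f, chosenLevel)}");
```

Since a collider's extent never exceeds its cell size under this rule, each axis contributes at most 2 cells regardless of where the collider sits.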

There are minimum and maximum levels, though, so extremely large colliders may need to be inserted into many grid cells at the top grid level. This could explain the behavior you're getting: the algorithmic cost of inserting a *really* large collider is still constant, but the constant factor is large.
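The effect of the maximum level can be sketched as follows. Assume each collider's ideal level is the smallest one whose cell edge 2^level covers its largest extent, and that this level is clamped to a `[minLevel, maxLevel]` range. The actual limits Obi uses aren't given in this thread, so the values below are made up, and the function names are hypothetical. A collider much larger than a top-level cell then spans roughly (extent / cellSize + 1) cells per axis, so its cell count grows with the cube of its size. Plain .NET 5+ console code:

```csharp
using System;

// Hypothetical: ideal level = ceil(log2(extent)), clamped to [minLevel, maxLevel].
int ClampedLevel(float largestExtent, int minLevel, int maxLevel) =>
    Math.Clamp((int)MathF.Ceiling(MathF.Log2(largestExtent)), minLevel, maxLevel);

// Cells covered at 'level' by a cube of the given edge whose min corner sits on a cell boundary.
long CellCount(float extent, int level)
{
    float size = MathF.Pow(2f, level);
    long perAxis = (long)MathF.Floor(extent / size) + 1;
    return perAxis * perAxis * perAxis;
}

const int maxLevel = 4; // assumed top level: 16-unit cells
foreach (float colliderSize in new[] { 10f, 100f, 1000f })
{
    int chosen = ClampedLevel(colliderSize, -10, maxLevel);
    Console.WriteLine($"extent {colliderSize}: level {chosen}, cells: {CellCount(colliderSize, chosen)}");
}
// extent 10 -> 1 cell, extent 100 -> 343 cells, extent 1000 -> 250047 cells (all at level 4)
```

The per-collider work is still independent of the number of colliders (constant), but the constant grows quickly once a collider outgrows the top level.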

Still, this might not be your case; I'm just trying to reason about it. Can you give any more details about the kind of colliders you're using? (If mesh colliders, do they have many triangles? Are they very large or really small in world space? etc.)

sdpgames (Posts: 10, Threads: 2, Joined: Jul 2019, Reputation: 0)

> (31-03-2021, 10:22 AM) josemendez wrote: [...] Still, this might not be your case. Just trying to reason about this. Any more details you can give about the kind of colliders you're using? (if meshcolliders, do they have many triangles? are they very large or really small in world space? etc)

It's true that our world has a peculiar setup in that respect: it's very small. The whole scene is around 2m x 2m. We did that because it's the scale at which the water looks best. Scaling the water by changing the settings didn't work for us (we had many issues), so it was much simpler to scale everything down like that.

The objects have a very low poly count: they are Voronoi-shattered pieces of simple shapes such as a box (so not only is the collider convex, the actual mesh is built that way too).
